Semester B Final Report 2013 - Cipher cracking

Contents

- 1 Executive Summary

- 2 Introduction

- 3 Review of 2012 Statistical Analysis

- 4 2013 Statistical Analysis

- 5 Decoding Toolkit - Matcher

- 6 Decoding Toolkit - One Time Pad

- 7 Decoding Toolkit - Testing

- 8 Cypher GUI Extension

- 9 The Rubaiyat Matcher - Matching Frequency

- 10 Mass Spectrometry - The Somerton Man's Hair

- 11 Future Work

- 12 Project Management

- 13 Project Deliverable and Closeout

- 14 Conclusion and Summary

- 15 See also

- 16 References and useful resources

- 17 Back

Executive Summary

The 2013 University of Adelaide Cipher Cracking Team, Lucy Griffith and Peter Varsos, investigated into the mystery of the Somerton Man and the secret uncracked code related to his case. The team worked several different aspects of the project, both extending work done by previous groups and taking on new tasks. The different tasks undertaken in 2013 were as follows:

- Extending the analyses done by the 2012 team on the frequency of letters in different languages.

- Attempting to use the Rubaiyat of Omar Khayyam as a One Time Pad key to decipher the code.

- Creating a Decoding Toolkit containing useful frequency analysis, matching and One Time Pad related tools in one place.

- Implementing cipher systems used in the 1940's in the Cipher GUI.

- Analysis of mass spectrometry data of the Somerton Man's hair.

- Creation of documentation and videos regarding the use of the toolkit.

The completion of the 2013 project allowed several conclusions to be drawn:

- The English Language statistically fits the best for the code assuming it is the initial letters of words in a sentence or list.

- The Rubaiyat of Omar Khayyam was not used as a straight substitution One Time Pad encryption for the code.

- Interesting information about the weeks before the man's death can be drawn from the man's hair.

As well as the conclusions drawn, several tools have been created to aid future endeavors in cracking the case. Additions to the ciphers available in the Cipher GUI that are actually possible to have been used in the time period have been made. A toolkit containing various matching, decoding and various string handling methods has been created for future use.

Project timelines have been followed and met, as well as the budget being managed. The goals set at the start of the project have been met, and at the time of project closeout the team is satisfied with the results that have been obtained.

Introduction

Background

The Case

Known as the Taman Shud Case the discovery of an unknown deceased man on Somerton Beach Adelaide (subsequently known as the Somerton Man) in 1948 remains one of Australia’s most baffling mysteries. Initially the case seemed relatively run of the mill suicide. The South Australian Police tried to identify the man first by using the clues available to them; the train and bus tickets found on the man led them to a suitcase stored in the Adelaide Railway Station but the man had no formal identification on him. Then the Police released his photo to the papers and his fingerprints to the army to check their records but there was still no positive identification. The body had to be embalmed and later a plaster cast was made of the victim’s bust so that the body could be interred. Six months after the discovery of the body, in a review of the evidence, a tiny piece of rolled paper was found in a pocket of the man’s clothing. This piece of paper contained the words Tamam Shud. Police, with assistance from the Library of South Australia, concluded that this scrap of paper had come from a book of Persian Poetry translated into English. Originally written by the Persian Philosopher Omar Khayyam the test was titled The Rubaiyat of Omar Khayyam and was translated by Edward Fitzgerald. A photograph released to the media of the scrap of paper led a Glenelg man to submit a copy of this very book for evidence. The book was a rare first edition and had been found on the backseat of a car parked along Jetty Road two weeks before the body was discovered. Police verified that the scrap had come from this very copy of the book and here the mystery deepens. Scrawled inside the back cover of the book were five lines of nonsensical letters and a telephone number. The phone number led Police to a local woman who denied any knowledge of the man or this copy of the book. The five lines of letters are the interesting part. These lines are believed by many to be an encrypted message written by the dead man. To this day the man has never been identified nor the message decrypted. Unfortunately the original copy of the book was lost in the 1950s. In 2013 our team was lucky enough to have access to a scanned copy of a first edition of The Rubaiyat of Omar Khayyam, the same edition connected to the Taman Shud Case.

The Code

WRGOABABD

MLIAOI

WTBIMPANETP

MLIABOAIAQC

ITTMTSAMSTGAB

Five lines of letters from the Roman Alphabet were found scribbled in the back cover of a copy of The Rubiyat of Omar Khayyam connected with the Taman Shud case. Many people believe that it is an encoded message but no one has been able to decode it. The second line seems to have been struck out. This makes sense since the second and third lines are similar so it is believed that the second line is an incorrect version of part of the third. For some of the letters it is unclear what the letters are; M or W, I or L. Much of the final year project work has focussed on this code.

Previous Studies

In addition to the initial South Australian Police investigation there have been many other studies done on the Taman Shud Case. The most notable attempt at cracking the code was made by the Australian Defence Force in 1978. Unfortunately the ADF concluded that the encoded message was insufficient in length to find a pattern and crack it. This has led our predecessors at the University of Adelaide to pursue a test and discount method for the code. Since 2009 there has been final year project teams studying the Taman Shud Case applying an engineering approach to cracking the code and attempting to discover the identity of the Somerton Man. In addition to trying many cipher systems on the code the project teams at Adelaide have produced software to encode messages and show analysis of the code, to trawl the internet for phrases and scanned the plaster bust of the victim in 3D. Despite the investigations of this case the identity of the Somerton Man remains a mystery.

Project Objectives

The objectives for 2013 were as follows:

- Review and extend the 2012 analysis of letter frequency in different languages.

- Create a useful software toolkit containing functions that will be used for this analysis, as well as One Time Pad decryption functions.

- Attempt to decipher the code using the Rubaiyat as a One Time Pad key.

- Extend the Cipher GUI to include ciphers used particularly in the 1940’s.

- Analyse the mass spectrometry data to draw some conclusions about the Somerton Man’s life in the time before his death.

This report will contain information about each of these modules including motivation, completion and results obtained.

Review of 2012 Statistical Analysis

In 2012 the honours project group conducted a frequency analysis of the letters of the Roman Alphabet in over 80 different languages. They analysed the relative frequency of the all letters and words beginning with each of the letter of the Roman alphabet. A single translated passage from the bible was used for this analysis. The 2012 team also did a second analysis of English separately using the Oxford English Dictionary. They compared the results from these analyses with the code from the Somerton Man Case. I have conducted a review of this analysis to identify possible extensions to the analysis for 2013.

The 2012 team conducted a wide analysis of many different languages. The text used for this analysis was a short translated passage from the bible, around 250 words long. This was not a large sample size, only around ten times the length of the alphabet itself. In addition the source of the text meant that there were many shared words between the languages (biblical names etc.). This has potentially skewed the data as those words may not have originally been from the language being analysed. I find little merit in this portion of the analysis undertaken by the 2012 group.

The analysis of the first letters of words in the Oxford English Dictionary is also not very useful, in my opinion. This text would contain many obscure and uncommon words so it does not represent the English language commonly used. This means that it gives little either way to the theory that the Somerton Code is an acronym of a sentence or the first letters from words in a list.

The 2012 team did come to the same conclusion as the groups before them: the language most likely used as an initial letter encoding for the Somerton Code was English.

2013 Statistical Analysis

In extension to the 2012 analysis it was proposed that the 2013 team, Peter Varsos and Lucy Griffith, conduct several new analyses. The first assumption we made for these analyses was that the Somerton code is not an anagram (in any language). Following this we conducted the analyses on the initial letters of words in much longer and less biased texts.

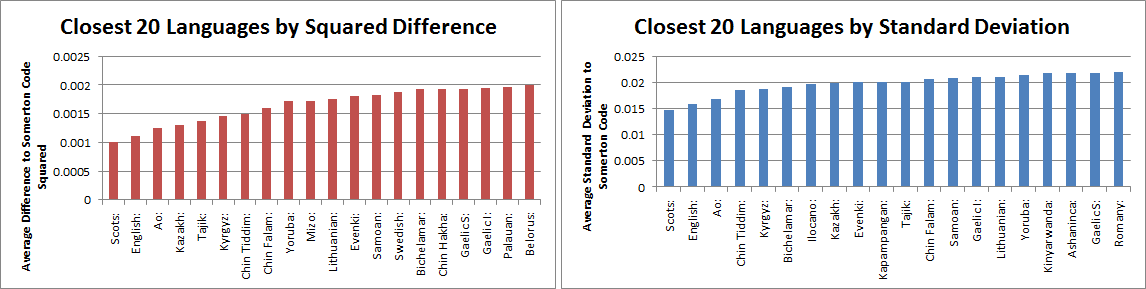

Language Analysis: Translations and Transliterations of the Universal Declaration of Human Rights into 268 Languages

Transliteration of a text is written phonetically with a different alphabet. In this case the Roman Alphabet was used. The Universal Declaration of Human Rights was available in over 400 languages online (see references). Of these translations many were paper scans and thus were too difficult to put into a text file for analysis. In the end 266 languages were analysed. This text was chosen because of the lack of names of people and places and because it was between 900 and 2500 words in each language (significantly longer than the texts used in 2012).

Several analyses done using this text. First the languages were analysed only counting the natural letters of the Roman Alphabet (those without an accent). The frequency of initial letters in each language was then compared with the frequency of letters in the Somerton Code (similar to 2012). We then altered our method slightly by also counting letters with an accent. This accommodates the possibility that some of the letters of the Somerton Code could be an accented letter with the accent removed for extra secrecy or some other reason. This did no change the results much. So we continued with the more inclusive analysis.

Then the languages were analysed using slightly different versions of the Somerton Code. The version of the code used at first included six letter M and zero letter W. This was the same as was used in 2012. The Analysis was then redone using a code with zero M and six W and then again with the code as it appears in the original police report from 1949 (this is five M and one W). This did not alter the results drastically. This confirmed that the analysis we conducted was quite robust.

Finally we graphed the top 20 European languages from the list. This was done with the analysis conducted using the Code as from the police report and including accented letters.

In the end the conclusion was the same as our predecessors; the most likely language is English if the code were the initial letters of words in a sentence or a list. This of course discounts the fact that in all our analyses Scots (Scottish) was number 1 consistently. We decided that, since Scots and English are very similar (in terms of initial letters), it was too close to distinguish. We defaulted to English because it was a more widely used language than Scots (and still is).

English Control

In order to back up our conclusion of English as the most likely language for the Somerton Code as a series of initial letters we decided to run the same analysis of all 266 languages using a control code from a known English text. For convenience we chose to use the Rubaiyat of Omar Khayyam a our control code source text. We used the first 44 words of the poetry book to keep our control code the same length as the Somerton Code. The result was surprising. It can be seen from the graph of these results that the two languages most closely related to the control code are Scots and English. This serves to support the assumption we made that Scots is so similar to English (in initial letters of words) that we can safely conclude English as our most likely language. It also supports the conclusion from the previous analysis.

We can now say with more conviction than English was the most likely language of origin provided that the Somerton Code was a sequence of initial letters of words in a sentence or list.

Genre Analysis: English Books Analysed by Genre

In the assumption that the language used was English the 2013 team wished to find out if there was a particular subject area which lent itself more to words starting with the letters in the Somerton Code. In this analysis several texts more than 100 years old were analysed by genre and graphed. All of the texts came quite close to the squared difference and standard deviation of the English version of the UDHR. This made sense since it was all the same language and it also reaffirmed the results from the previous analysis.

The highest correlation came from the genre of Gastronomy, this included house keeping and other similar subjects. This is interesting because this genre would contain the most common household words. This would suggest that the mysterious encoded message in the Tamam Shud Case could be nothing more than a condensed list of chores or shopping.

The conclusions we can draw from this are few, even if testing for correlation between genres is an interesting idea. We found that the Rubaiyat is just too small for there to be a detailed test compared to some of the other source material books. We also decided that, barring a huge obvious outlier, it was really too difficult to say how much of a deviation was statistically significant to the point that it warranted extra looking into. We therefore put this small analysis as a note of interest rather than a large section.

Decoding Toolkit - Matcher

A .rar file containing the final matching toolkit is available here: File:DecodingToolkit.rar

Concept

With the correct edition of the Rubaiyat available to us for the first time and its supposed connection to the case, it is important that we begin to do the basic analysis of how it could be involved as it could trivially lead us somewhere. A software suite allowing us to get the first letters of every word in any plaintext document such as a plaintext version of the Rubaiyat and check for the letters from the secret code within it will greatly increase efficiency of testing down the line. With the previous groups findings that the code being the first letters of words is likely, this software will allow us to check this against the Rubaiyat and against any other source we find later on. Secondly, later work on the code will require working word matching software and so building it for this will allow us to extend it later. In particular, the one time pad software will generate many decrypts that will need to be searched through, which an expanded version of this will allow. This needs to be taken into account in the design, all of the code for the first letter analysis will be kept separate and the rest kept very modular to allow other methods to plug into it later.

Design

So from this concept came a fairly simple design process. A GUI was created around a central program, with handling for readers and writers pointing to several text documents which allows the toolkit to run on any plaintext document. Each of the seperate functions added to the toolkit would be passed the information from the text documents by the central program, and potentially pass it back to be written back to plaintext.

Therefore as the project continued, the toolkit expanded and 'grew' as new functions were added to it, all behaving the same way and running independently of each other. At first various string handling functions were created, then matching algorithms and finally the one time pad tools were extended onto the toolkit. As each of these simply took its inputs and wrote its outputs to text documents, it is possible to do several of these functions in a row on a plaintext document to very quickly do complicated functions very easily (as an example: take a text document, format it, take the first letters only, do a one time pad decrypt from another text document and then run a search algorithm in four clicks!). This made future parts much easier than doing these processes manually.

Implementation

The letter matching part of this software has several functions. It contains several small methods by which a block of plaintext can be converted into a string of the first character of every word. The main part of the software will allow another small string to be checked against this one in the hope of finding ‘matches’, the contents of the string within the other.

The first implementation simply checked to find if the contents of the string were within the larger one, however this is obviously only going to give us a very shallow way of checking. Therefore even before looking at possible extensions to the first version it was necessary to make a few different types of matching methods and implement them in parallel in the hope that one will give us what we were looking for. A very simple way of doing this was to check for partial matches rather than only full ones - which could be implemented trivially as it can be done exhaustively. There is the potential to expand in this area later, as even with random data some partial matches will come up for single, double and even triple letters - setting it up to work these out and looking for a certain threshold where it becomes statistically significant could yield a very robust way of searching for this.

Also implemented was a wildcard match. One of the big problems when looking at the code is the possibility that one or more of the letters could have even been copied down wrong (the handwriting wasn't the best!) which makes any kind of statistical analysis kind of pointless as it could all be missing one important thing. Therefore, a very simple method which does the above matching while ignoring each letter in the code iteratively was designed. This could easily be extended later too, to account for more letters and to also do a combined match with the above partial matching method. A proper combination of the two could be very worthwhile if we deem it worth the time to go down this path later - but would be unlikely to yield results for this particular example and so it will be left until then.

Finally it is worth mentioning the possibility of extending this specific example to other text. We were able to come by a different translation of the Rubaiyat to see if it would give us a better result, and should any other possibilities come up later we can try this.

Decoding Toolkit - One Time Pad

Concept

The one time pad software is designed to read a key and a code from two text files, and write to another file a list of all possible decrypts of that code using every possible one time pad combination of letters throughout the key document. It must also give the option to 'shortlist' or search this massive list of decrypts, and sort them in such a way that the decrypts that are more likely to be of interest are at the top.

Requirements

- Read in from a buffer containing two separate text documents, the key and the code.

- Be able to generate a translation key in an array from any given starting point in the key document.

- Iterate through the document creating such a key for every possible output.

- Use said keys to create a decrypt, output these in another text document.

- Allow on the spot searching of these decrypts.

- A method by which a 'rating' can be given to these decrypts, which accurately rates its likelihood to be English rather than gibberish.

- Creation of 'debug mode', a tool to allow ease of debugging and verifying the code later.

- Sort these decrpyts by their rating to allow ease of searching for a likely candidate.

- Creation of a GUI that is both easy to use and does not hide functionality.

- Creation of neat and modular code to allow possibility of later additions from other groups.

Design

Because of the complicated nature of this program, we had to be very careful with our design. In essence, since the program needs to be able to work with very large plaintext documents it necessitates it being transient - as in it simply reads and buffers from the two text documents, decodes, and prints the resultant decode to a third text document without storing anything in memory.

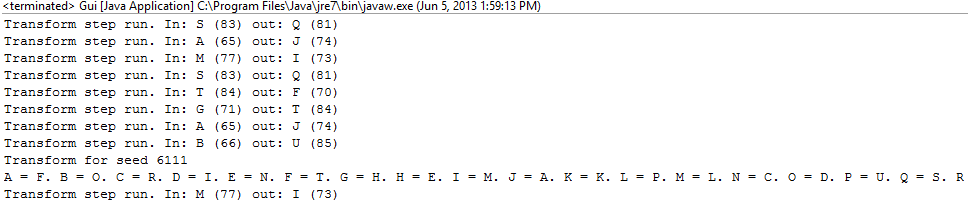

By using the modular GUI and matcher driver from the previous section - including the already designed buffers and readers - a lot of time was saved. The main problem then was the actual encryption methods itself.

A recursive solution was used. A method was designed which would read in from the key plaintext one letter at a time and create a transform array taking each new letter in order. This was called recursively with a seed allowing it to start from 1 letter forward each time, until it hit the end of the document. After this step the transform array was simply passed to a decrypt method which applied it (backwards, we are decrypting not encrpyting!) to the message and printed the solution to a new document. This generates a list of (tens of) thousands of decrypts for an average sized document such as the Rubaiyat, which need to be searched through.

A search and shortlist function were also designed. Again, this is simply modularly available from another button on the GUI and just reads from the filename that the decrypt method outputs to. The search function steps through this list of decrypts document buffering each line into a string, and search said strings for whatever word was typed into the box in the GUI.

The shortlist function simply takes each one and 'ranks' it depending on its likeliness to be an interesting string. In terms of design, we decided that being incredibly strict with these ranking criteria was really not needed, as it was fairly obvious very fast that the vast majority (usually all) of the decrypts were just going to be complete nonsense strings of random letters not even resembling English. These can be picked out very fast and a human is easily capable of quickly spotting a real string of words among a few hundred (but not tens of thousands). Therefore a points system was devised with three catagories - Very Strong, Strong, and Weak matches. It would bump a string containing any common words such as "and" or "the" right up into the very strong category immediately for a human to look at, as well as giving points to common English bigrams (pairs of common letters such as th, en) and common letters. It was tuned to an extent that out of the tens of thousands of decrpyts output by the Rubaiyat and message, a few hundred were very strong and around the top third were strong - a human could very easily look through this shortlist for anything of interest.

Implementation Process

- Design a solution for iterating through a large document to get a translation key.

- Design a method to decrypt a code using a given key.

- Implementation of a program using said designs.

- Research what attributes of a string make it more likely to be English

- Implementation of a search function using the decided upon approach.

- Test and Verification of Software

Results

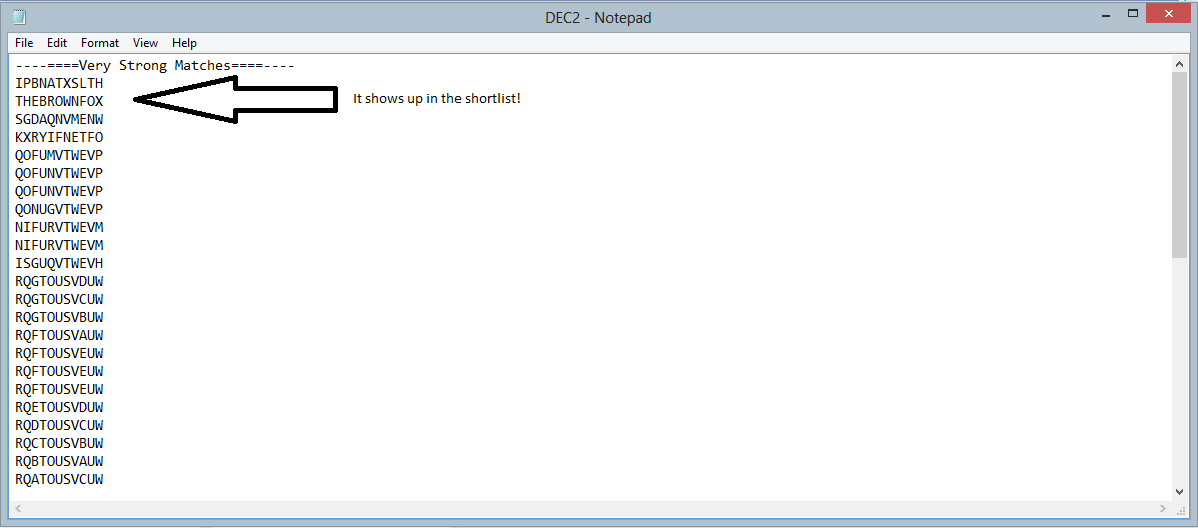

The implemented code solution for the One Time Pad was successful in allowing us to generate a list of decrypts for any given key and code. Several tests including an example shown below show the software works as intended. Users must simply put the text files in the correct places as prompted, and press a big 'get decrypts' button and the program will open up a page with all possible decrypts in a list.

The shortlist function works insofar as it will rank the decrpyts successfully, and in many test cases was able to pick a string of English words from a block of gibberish. However, these were specifically designed test cases using knowledge of the heuristics used to search for these words. With a fairly simple method to detect common words, bigrams and letters we still can't garuntee that every single possible sentence would rank higher than any given string of gibberish that may come out. For the current example of a small block of text we believe the current implementation to be sufficient to pick out a secret code, however there is much room for improvement in this method if the need ever arises for later groups.

With the software suite working we were able to begin testing to see if we could decode the message. Several things were tried:

- Using the full plaintext Rubaiyat as a key resulted in some ten thousand decrypts, of which none in the shortlist yielded results.

- Using the first letters of the Rubaiyat generated by our matcher software as a key resulted in a thousand or so possible decrypts, which again gave no positive results.

- Using the phrase 'Tamun Shud' (with an alphabet afterwards to fill the translation)yielded no results. Similarly using 'TS' with alphabet after it gave nothing.

We were also able to test some straight shift cyphers as a OTP cyper with a shifted alphabet is equivalent to a shift cypher (e.g. a OTP key 'bcdefg...xyza' is equivalent to a shift cypher where every letter is moved one to the right). Testing every possible straight shift is possible by just using two alphabets one after the other - this also gave no positive results.

One Time Pad Example

Here is a quick example of the toolkit in operation decoding a One Time Pad encrypted message. The first step is to generate an encoded message. Here we used "the brown fox" and a shifted-alphabet one time pad key of: 'zabcdefghijklmnopqrstuvwxy'. This gave us an encrypted message of "UIFCSPXOGPY".

Our input files for the decryption toolkit are the encrypted message and a section of nonsensical text which includes our original encryption key.

The decoding toolkit can then be run to "Get Decrypts". This produces a file of decrypts with every possible encryption key from the text. These keys are sets of 26 consecutive letters each shifted one letter along from the previous.

The decoding toolkit can then "Search Decrypts" for a specific keyword entered in the "Keyword to search decrypts" box or it can "Create a Shortlist" or decrypts containing common English words and letter combinations. The shortlist generated during our example contains the decrypt of the original message!

Decoding Toolkit - Testing

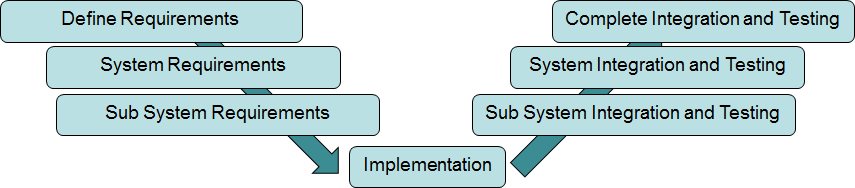

By design the toolkit grew from a collection of small matching algorithms into the complete package it is now. As such, a standard V-Testing procedure was very relevant as the testing breakdown was split into individual testing of the various modules and system level integration tests.

At a subsystem level, each of the modules incorporated were designed such that they could be individually tested. They were connected to the GUI and could take inputs using the various file readers, but were isolated from the surrounding methods and not connected into larger scope methods until their function was verified. Edge and problem cases were generated for each of the algorithms and subroutines. The individual matching and one time pad methods were verified in this way for their respective inputs on a case by case basis. As these individually were all fairly simple letter translation and string manipulating methods and sub methods a large degree of confidence can be drawn as to their individual operations.

At a system level this became more complicated. The need for very robust tools to check the interfaces between the separate modules was identified very early in the projects lifespan. Because of this, a a 'debug mode' option was supported all the way through, available as a toggle in the file menu of the GUI. When toggled on, each stage of the processes would output additional information to the terminal, allowing testing within the actual GUI of the program in real time. As the expected outputs of each stage of this process are very deterministic, this made sanity checks for each stage almost trivial and allow very easy verification of the outputs by matching examples to those done by hand, or just straight up looking at the printed outputs of what enters each stage, what it outputs and whether or not this is expected all the way through. Additional checks were hard coded to occur when this mode was toggled on (to avoid additional calculation overhead) to make sure that various strings, sub-strings and arrays that should match between sections did in fact match. In the final releases of the toolkit several of these were removed to remove the (incredibly small) cost of the debug mode check occurring each time a loop occurred (several hundred thousand times in some cases), when it was deemed the measure was not needed anymore - but many remain for future use.

In terms of ease of use, it is not expected that an end user would need understanding of the methods involved. Therefore, the readers and string handling methods handled exceptions of small or empty keys/code externally, prompting the user to replace their input rather than passing it to the actual algorithms which would output nonsense that they would have to interpret as such. This makes it easy to use. Additionally, with the use of calls for OS supported endlines and similar, the program will run on both Windows and UNIX systems.

Finally, the shortlist algorithm was the only large non deterministic part of the system and thus needed additional testing and tuning. If it was not tuned correctly it could very easily throw potentially useful decrpyts into the "weak match" category where it might not be looked at - which would be a disaster. Initially a full suite of testing was designed, however it very quickly became obvious that it was a lot easier to pick out English sentences from random strings than we first thought. From a document of a few hundred of these random strings of a message the size of our secret code, a ranking system based on just a few simple Heuristics that gave points based on common words (and, the, it, at), common bigrams (th, en, at) and common letters (vowels) would often double or triple the strings of random letters in score. As such, we simply tuned it in such a way that people would be able to go through the very strong and strong sections by hand, and are fairly confident that while our shortlist method isn't a perfect way of detecting English it is very unlikely to miss any English sentence completely.

Cypher GUI Extension

A .rar with the final cipher GUI executable can be found here: File:Cipher GUI.rar

Previous Work

The 2011 group completed a Ciper GUI with the aim of allowing fast encrypting and decrypting of several different ciphers within an easy to use GUI. Its design allows a fairly easy addition of new ciphers, making repeated tests of new ideas such as new keys or slightly different codes very easy. As well as the actual implementation of this rather large piece of software they implemented quite a few actual ciphers as part of the package - many of which were from the cipher cross-off list. However the ciphers chosen were for the most part simple ones (as I assume the actual software itself took a while to get working leaving no time for in depth look) This leaves a lot to be desired in terms of actually solving our secret code, in terms of an in depth look into which codes were frequently used at the time and which it could likely be.

Concept

With this in mind, our aim going into this section was to actually do some detailed research into which ciphers were likely to have actually been used during the time period. Here, there were no readily available computers, and we can guess that it is unlikely that the person in question had access to a mechanical method of encryption as this was rare outside military applications. This may be able to help us narrow it down to a cipher that can be used to quickly encrypt a message by hand. Indeed the messiness of the handwriting supports this hypothesis, it looks like it was done speedily. This therefore gives us a good starting point for finding, testing and then implementing a few new ciphers into the Cipher GUI that match this criteria. A small amount of information about relevant ciphers and why we chose them is added here:

Column Cipher: A transposition cipher that is well known to have been using at the end of WW2 and in the cold war due to its relative strength and its good speed of hand encryption. As a transposition cipher it is unlikely that this is a direct transform from our secret code since it would be the same letters in different places and it is difficult to make any sentences with the letters shown (or to put it technically frequency analysis shows shows the code is unlikely to be a straight transposition and more likely to be a substitution as previous groups discovered). However the fact this was used so commonly and easily leads to a chance that it was used after a substitution cipher for another layer of complexity and therefore we decided it was worth implementing.

Modified Column Cipher: Similar to above, but with a slight difference in how the transposition matrix is constructed. When a repeated letter is met in the original column cipher method, it is skipped and the next non repeated letter is used. In the modified version, the entry in the matrix is 'skipped over', or left blank, and the next non repeated letter is placed in the next available location. This leads to a larger matrix and slightly more complicated calculation but provides a similar level of encryption to the original method.

Design

The previous group implemented the code, so half of the challenge of this part was working out how they designed everything.

The design was fairly modular as a result of the way that the software was designed to handle many different types of ciphers. As such, we simply needed to design a single cipher modules with defined inputs and outputs as set by the connector.

This makes the design fairly simple, as did the use of eclipse - a free IDE that we also were using for our project - we were able to just import their project material in!. A diagram from the previous implementation is given here as figure 1, showing that our new modules need simply connect to the interface.

Implementation

Two modules were completed for the cipher GUI's operation. These are:

- Column Cipher

- Modified Column Cipher

Testing

The previous group that implemented the cipher GUI did so in such a way that each different decoding 'module' was a separate entity, talked to by a cipher interface. As we can be fairly confident in their testing and analysis of the cipher GUI as a whole, we therefore need only worry about subsystem testing in this section.

Each of the different cipher modules are completely deterministic, a set input of a key and code will give the same output encrypted message each time. Therefore, testing was a fairly simple affair. We tested edge cases for each of the separate ciphers we implemented, and made sure that the results we got matched that of the same examples done by hand or with another decoding software. Again, all of these examples were fairly trivial so detailed documentation of the edge cases is not provide anywhere, we have a high degree of confidence in the correct operation of these modules.

The Rubaiyat Matcher - Matching Frequency

Concept

With the previously designed software suite able to read in from a large block of text, parse and find all partial matches of a substring within said text, we are now able to begin to look for some more interesting data rather than just exact matches for our code.

Statistically, finding matches for small substrings (2-3 letters) of our secret code within the first letters of each word is very likely in large blocks of written text. We therefore compare the number of these smaller matches found in the Rubaiyat with the number of matches found in various other random texts (taking into account the number of words searched in each text) in order to determine if there is a statistically significant difference in the number of large matches in the Rubaiyat compared to them.

Design

The match frequency method was built by extending the partial match code already implemented - we can just use the already functional software to parse in and get the first letters of each word in a large text excerpt, and find every possible partial match. Therefore all that is required is to create a function (with GUI support) that just trivially counts the letters in each and increment a variable in an array corresponding to the numbers of letters. This made the design and inclusion of this addition to the software and GUI straightforward and easy to test, while behaving in a way that is consistent with the already designed methods.

Returning this array directly to text will allow us to graph it using external programs such as excel.

Testing

As the partial match program was already tested thoroughly, testing this module was trivial and was done by simple breakpoint testing for edge cases of possible matches.

Results

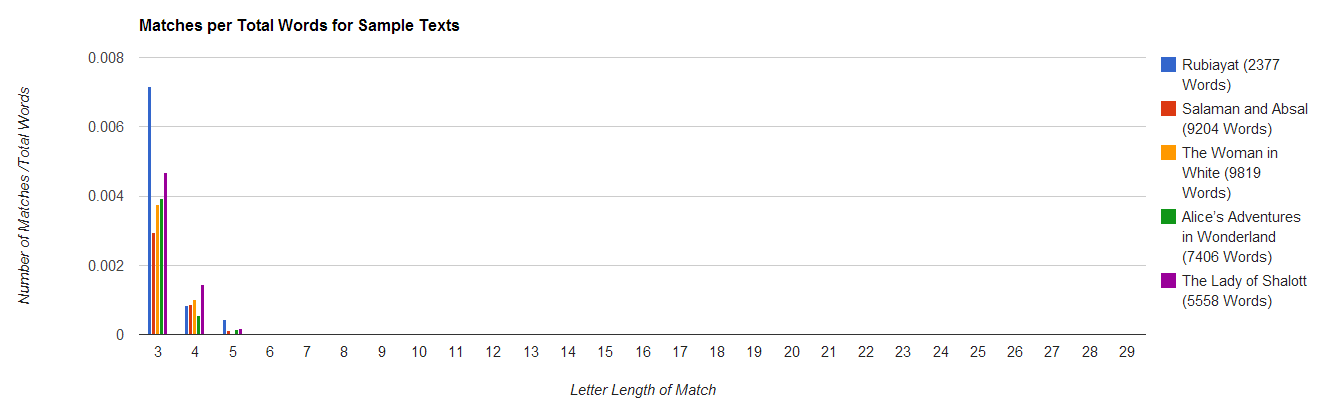

Several texts from many different sources were tested for first letter matches. Excerpts were taken from:

- Similar translated Persian Poetry book 'Salaman and Absal'

- Period specific (1842) English Poetry 'The Lady of Shalott'

- Classic English Fiction Alice’s 'Adventures in Wonderland'

- Crime Novel 'The Woman in White'

The results were fairly conclusive. None of the above contained a match larger than 5 letters long - and that is accounting for the fact that a direct match to the first letters of the code would need to be a staggering 44 letters!

Figure 3 shows each of the above sources matches graphed. Each shows a very similar pattern, with no large suspicious outliers. Therefore it seems very likely that the Rubaiyat falls within expected matches for a random text.

Conclusions

It is interesting to note that the Rubaiyat did indeed have more matches (especially 3 letter matches) than any of the other source material. The question then becomes, how many more would there be required to be a statistically significant number which would make us think to look deeper?

Since the largest match is only 5 letters long, and we would expect a 44 letter match if the code was in the Rubaiyat, we eventually decided to leave it. There is definitely the potential for extension here in the future, however we don't see these results to be exiting enough to warrant taking time from the software production.

Mass Spectrometry - The Somerton Man's Hair

Motivation

The motivation for running a spectral analysis on one of Somerton Man's hairs is to gain some clues about the environment that the Somerton Man lived in for the weeks leading up to his death. In the future these clues may help with his identification.

Mass Spectrometry Background

The spectral analysis of the Somerton Man's hair was completed using laser ablation of the hair. Mass spetrometry is the measurement of the level of different isotopes present in a sample using the unique spectrum of light emitted when the sample is burnt. In this case the sample (Somerton Man's hair) was burned with a laser and the spectral elements present recorded. Unfortunately the hair was mounted on a glass plate in this process (usually the sample would be mounted on quartz so that particles of the mounting ablated by the laser will only affect the silicon measurements). The glass plate mounting would have contained a multitude of impurities which will have affected the results of the spectral analysis. Several control data sets (from modern Australian people) were also obtained for comparison with the Somerton Man results.

Our challenge was to take the 45 isotope traces from the Somerton Man's hair and extract some clues about his surroundings before he died. The Somerton Man's hair was 6054.6 micro-metres long. This would equate to roughly two weeks of growth in an average person. The laser ablation began at the root end of the Somerton Man's hair and ended toward the free end. This means that the data represented in the graphs below shows the isotope levels in reverse chronological order from the time close to the Somerton Man's death to approximately a fortnight prior (left to right on the graph).

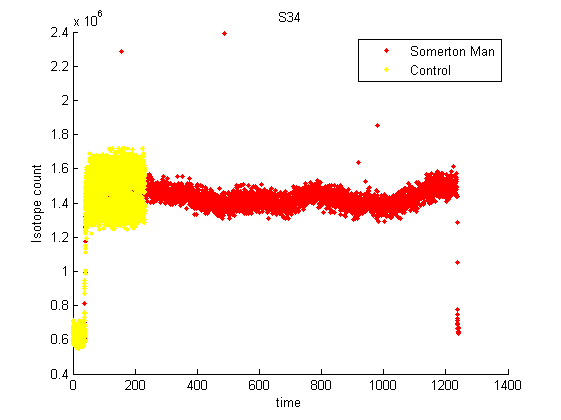

Sulphur Trail

First and foremost, we needed to understand the data from the different hairs. We needed to know what data was important and what was not. All people have relatively constant level of sulfur present in their hair. Graphing the sulphur trace of both the Somerton Man and a control, we see the levels are fairly consistent for a while but drop off suddenly at either end. This allowed us to easily see where the hair 'starts' and 'ends' - essentially allowing us to see which time slices will give applicable data for each hair.

Discarded Data

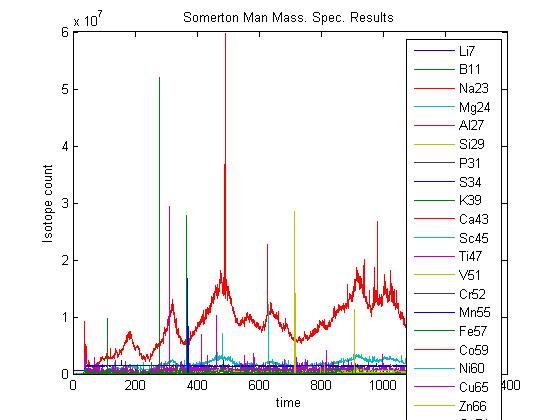

A unedited graph of all of the elements present in the Somerton man's hair is shown here. This was sorted by us to find the data of interest.

Of the 45 isotope traces only some were of interest to us in determining clues about the surroundings of the Somerton Man. Some of the traces showed errors and had to be discarded. These errors were : some of the traces were out of range for the Mass Spectrometer, other traces were flat (like the sulphur trace) and so were not of interest, and others shared the same pattern of peaks and troughs which we believe are points where the laser burnt through the sample to the glass plate below. Examples are shown below. There were also traces showing that the isotope was almost non existent in the sample. These traces we considered and discarded in the case the absence of the isotope did not give us any more information.

Results of Interest

The isotope traces we found to be of interest were: Aluminium(Al27), Titanium(Ti47), Chromium(Cr52), Iron(Fe57), Copper(Cu65), Strontium(Sr88), Zirconium(Zr90), Silver(Ag107), Tin(Sn118) and Lead(Pb206).

The graphs of the Somerton Man hair with the controls and details of the isotopes can be seen below.

Aluminium (Al27)

Although the aluminium trace appears to be within the range of the modern controls there may be some clues to be gained from this graph data. Further investigation is needed here.

Titanium (Ti47)

The titanium trace is very noisy. More investigation is required for any clues to come from this data.

Silver (Ag107)

There is a spike towards the root end of the Somerton Man's sample trace. This could be beyond the end of the hair (contamination from the glass mounting plate). More investigation is required.

Tin (Sn118)

The tin trace is very noisy. More investigation is required for any clues to come from this data.

Lead (Pb206)

The isotopes of lead present in the hair are of interest because they may give clues as to the living conditions and location of the Somerton Man over the last month of his life.

Initially we overlaid each of these graphs with the sulfur trails to confirm that the trace was all on the hair. The sulphur trace has been omitted in these graphs for clarity since the lead level falls very fast when the sulfur levels drop off anyway.

Our controls have a much smaller time slice of hair taken but we can still compare the levels assuming that the control trace remains relatively constant. We see that the Somerton Man's hair contains significantly more lead (at all times) than the control does - this could be explained by the presence of lead in construction during the late 1940's (in water pipes and paint).

The most interesting quality of the lead trace is how the level changes over time. The trace maintains a relatively constant level for the first half and then increases substantially over the second half. From this we can infer that he had a significantly higher level of lead exposure in the last week or so before his death.

Conclusions

Ideally it would be the sample should be re-analysed using a quartz mounting. Unfortunately this is impossible because the original sample was destroyed in the ablation process. The isotope traces that have been flagged by the 2013 team as of interest need further investigation as to the significance of the presence of those in particular. The 2013 team have chosen to leave this to future Code Cracking honours students (or any other interested party). The clues are waiting in the data.

The data of interest would need to be dealt with using an outlier identification and removal process. An investigation was done on one such process: the Thompson Tau Outlier Identification Method. An attempt was made by Lucy to write a matlab function to perform the outlier identification and removal but it proved time consuming. There are some matlab functions available for free on the matlab forums which claim to perform this process (identification). One of these was downloaded (Author: Michele Rienzner) but was verification was not completed by the 2013 team. This function could be verified by future honours students.

References for explanation of the Thompson Tau Method can be found in the matlab function. There is an overview of the Modified Thompson Tau method at [1] from Penn State University in the USA.

Future Work

This project will most likely be run again as an honours project for electrical and electronic engineering. Some extension from this year's project include the following.

- Implementation of additional Ciphers into the Cipher GUI.

- Addition of new codes to the Cipher Cross off List.

- Implementation of further tools to the decoding toolkit, including a basic frequency analysis suite.

- Testing of further different possible keys as One Time Pad keys.

- Addition of further possible One Time Pad decryption schemes to the toolkit, other than direct letter addition.

- Clean up the 3D digital model of Somerton Man's bust (from 2012) in order to increase probability of it being useful for image recognition software in the future.

- Investigation of the mass spectroscopy data flagged as 'interesting' by the 2013 team.

- Update the Web Crawler from 2011.

Project Management

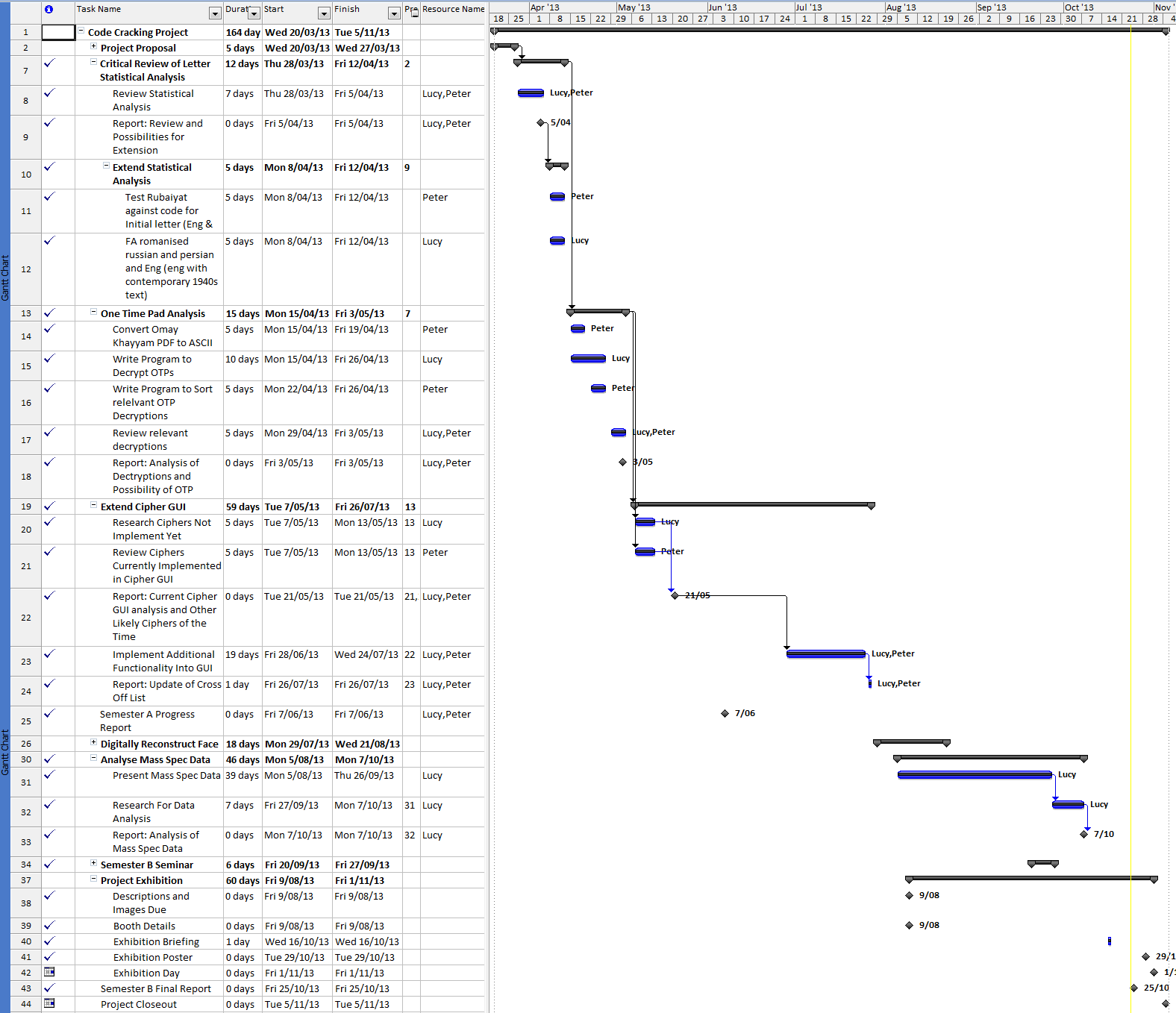

Project Schedule

Tasks

The task allocation for the project was decided by the two group members depending on their confidence in the respecive areas. Peter took on most of the coding work, while Lucy ran the analysis of the frequency and mass spec data and drew conclusions.

A detailed breakdown of the task allocation and completion is shown here:

For the course of the project, a Gantt chart was developed and followed. As the different tasks in the project were in flux, the chart changed several times throughout the course of the project. This allowed time to be allocated to where the members felt the best results could be obtained, and dead-ends and tasks that would take too long were 'dropped off' the edge to allow time. Additionally, when tasks began to take longer than expected or extensions were thought up we had the freedom to decide what was best for the project outcomes as a whole and change the timetable to suit. An example of this is the frequency analysis section. Both the genre and line length analysis was thought up at the end of their respective time periods as extensions to completed work. We extended the timetable to allow space to do these two tasks.

Gantt Chart

A detailed breakdown of the task completion during the project is available here in this Gantt chart.

Budget

As of the end of this project, we have had no need of the budget allocated to us by the School of Electrical and Electronic Engineering.

Risk Management

A full detailed risk assessment analysis was done pending the start of the project. This lead to a strategy followed throughout the project life-cycle. As this document was very large it will be kept separate from this page, and available as a pdf linked here:

File:RiskAssessment2013.pdf File:PlantRiskAssessment2013.pdf

Project Deliverable and Closeout

The deliverables at the end of the 2013 project are as follows:

- Software

- Updated Cipher GUI (File:Cipher GUI.rar)

- Decoding Toolkit (File:DecodingToolkit.rar)

- Results

- Conclusions drawn from frequency analysis data on this wiki

- Mass Spectronomy Graphs

- Supporting Documents

- Text document on operation of Cipher GUI

- Text document on operation of Decoding Toolkit

- Video on the operation of the toolkit

- Video on the project

- Additional Material

- Project Poster

- Project Wiki

- Gantt Chart and Risk Assessment

- Presentations

- Initial and Final Seminars

- Project Exhibition and Demonstration

The material delivarables listed above are all available here on the wiki. Software and packaged graphs are available as links to .rar file packages in the See Also section below.

Conclusion and Summary

The 2013 Cipher Cracking group is satisfied with the level of completed deliverables at the end of the project lifecycle. The code cracking effort has been greatly furthered by the creation of the new decoding toolkit, addition of one time pad testing tools and extensions to the cipher GUI. Future groups will find these tools invaluable in furthering the testing done next year. Some interesting conclusions were drawn from the frequency analysis data using the new toolkit and furthering previous years efforts with larger sources of text. Future groups now be very confident that if the code is the first letters of language it is very likely to be English. Finally there is a lot of collected data from the mass spectronomy section. The conclusions drawn while sorting and graphing the data were interesting. Future groups may further extend this by researching the meaning behind the compounds isolated and graphed by this project.

While we still do not know the identity of the man nor the meaning of the code, the 2013 group is happy with the progress this year and look forward to hearing of any findings in the future.

See also

- Cipher_Cracking_2013/Rubaiyat_Plaintext

- File:DecodingToolkit.rar

- File:Cipher GUI.rar

- File:PlantRiskAssessment2013.pdf

- File:RiskAssessment2013.pdf

- Cipher Cracking (main page)

- Cipher Cracking 2013

- Cipher Cross-off List

- Timeline of the Taman Shud Case

- List of people connected to the Taman Shud Case

- List of facts on the Taman Shud Case that are often misreported

- List of facts we do know about the Somerton Man

- The Taman Shud Case Coronial Inquest

- Primary source material on the Taman Shud Case

- Secondary source material on the Taman Shud Case

References and useful resources

- The taman shud case

- Edward Fitzgerald's translation of رباعیات عمر خیام by عمر خیام

- Adelaide Uni Library e-book collection

- Project Gutenburg e-books

- Foreign language e-books

- UN Declaration of Human Rights - different languages

- Statistical debunking of the 'Bible code'

- One time pads

- Analysis of criminal codes and ciphers

- Code breaking in law enforcement: A 400-year history

- Evolutionary algorithm for decryption of monoalphabetic homophonic substitution ciphers encoded as constraint satisfaction problems

- Copy of The Advertiser from 1948 for Letter Frequency Analysis: English